I’d pay extra for no AI in any of my shit.

I’d already like to buy a 4K TV that isn’t smart, and I have yet to find one. Please don’t add AI into the mix as well :(

Look into commercial displays

The simple trick to turn a “smart” TV into a regular one is to cut off its internet access.

Except it will still run like shit, and it may send telemetry via other means to your neighbor’s same-brand TV.

I’ve never heard of that. Do you have a source on that? And how would it run like shit if you’re using something like a Chromecast?

I don’t know about the telemetry, but my smart TV runs like shit after being on for a few hours. Only a full power cycle makes it work properly again.

Mine still takes several seconds to boot Android TV just so it can display the HDMI input, even when it’s not connected to the internet. It has to stay plugged in all the time, because after a power cut it needs to boot Android TV all over again.

My old dumb TV did that in a second without booting an entire OS. Next time I need a big screen, it will be a computer monitor.

I got a Roku TV and I don’t even know what that means, cuz my telly will never see the outside world.

Still uses the shitty ‘smart’ operating system to handle inputs and settings.

I just bought a commercial display directly from the Bengal stadium. Still has Wi-Fi.

I was just thinking the other day how I’d love to “root” my TV like I used to root my phones. Maybe install some free OS instead

You can if you have a pre-2022 LG TV. It’s more akin to jailbreaking since you can’t install a custom OS, but it does give you more control.

We got a Sceptre brand TV from Walmart a few years ago that does the trick. 4k, 50 inch, no smart features.

All TVs are dumb TVs if they have no internet access

I just disconnected my smart TV from the internet. Nice and dumb.

Still slow UI.

If only signage displays had the fidelity of a regular consumer OLED without the business-usage tax on top.

What do you use the UI for? I just turn my TV on and off. No user interface needed. Only a power button on the remote.

Even when switching to other stuff right after boot (because powering on can’t be called a simple power-on anymore), the TV is slow.

I recently had the pleasure of interacting with a TV from ~2017 or 2018. God, was it slow. Especially loading native apps (Samsung 50"-ish TV).

I like my Chromecast. At least that was properly specced. Now if only HDMI and CEC would work like I’d like them to :|

Signage TVs are good for this. They’re designed to run 24/7 in store windows displaying advertisements or animated menus, so they’re a bit pricey, and don’t expect any fancy features like HDR, but they’ve got no smarts whatsoever. What they do have is a slot you can shove your own smart gadget into, with a connector that breaks out power, HDMI, etc. Someone has made a Raspberry Pi Compute Module carrier board for that slot, so if you’re into, say, Jellyfin, you can make it smart completely under your own control with e.g. LibreELEC. Here’s a video from Jeff Geerling going into more detail: https://youtu.be/-epPf7D8oMk

Alternatively, if you want HDR and high refresh rates, you’re okay with a smallish TV, and you’re really willing to splash out, ASUS ROG makes 48" 4K 10-bit gaming monitors for around $1700 US. HDMI is HDMI, you can plug whatever you want into there.

I don’t have a TV, but doesn’t a smart TV require internet access? Why not just… not give it internet access? Or do they come with their own mobile data plans now meaning you can’t even turn off the internet access?

They continually try to get on the Internet; it’s basically malware at this point. The onboard SoC is also usually comically underpowered, so the menus stutter.

I never needed a TV, but now I for sure am not getting one.

IDK why people are downvoting you, I am sure you’re not alone with that sentiment.

A lot of TVs require an account login before you can use them.

OK, that’s really fucked. What the hell? Wait a moment… that means they could turn the use of the TV into a subscription at any time! That’s crazy…

I’m sure that’s coming up.

As a yearly fee for DRM’d televisions that require Internet access to work at all, maybe.

Right now it’s easier to find projectors without it or a smart OS. Before long, though, it’s gonna be harder to find ones without a smart OS and AI upscaling.

The dedicated TPM chip is already being used for side-channel attacks. A new processor running arbitrary code would be a black hat’s wet dream.

It will be.

IoT devices are already getting owned at staggering rates. Adding a learning model that currently cannot be secured is absolutely going to happen, and going to cause a whole new large batch of breaches.

The “s” in IoT stands for “security”

Do you have an article on that handy? I like reading about side channel and timing attacks.

That’s insane. How can they be doing security hardware and leave a timing attack in there?

Thank you for those links, really interesting stuff.

It’s not a full CPU. It’s more limited than a GPU.

That’s why I wrote “processor” and not CPU.

A processor that isn’t Turing complete isn’t a security problem like the TPM you referenced. A TPM includes a CPU. If a processor is Turing complete it’s called a CPU.

Is it Turing complete? I don’t know. I haven’t seen block diagrams showing that the computational units have their own CPU.

CPUs also have coprocessors to speed up floating-point operations. That doesn’t necessarily make them a security problem.

I would pay for AI-enhanced hardware…but I haven’t yet seen anything that AI is enhancing, just an emerging product being tacked on to everything they can for an added premium.

In the 2010s, it was cramming a phone app and Wi-Fi into things to try to justify the higher price, while also spying on users in new ways. The device might even have a screen for basically no reason.

In the 2020s, it’s those same useless features, now with a bit of software with a flashy name that removes even more control from the user and lets the manufacturer spy on the user even further.

It’s like RGB all over again.

At least RGB didn’t make a giant stock market bubble…

Anything AI actually enhanced would advertise the enhancement, not the AI part.

My Samsung A71 has had devil AI since day one. You know that feature where you can mostly use fingerprint unlock, but then once a day or so it asks for the actual passcode for added security? My A71’s AI has a 100% success rate at picking the most inconvenient time to ask for the passcode instead of letting me do my thing.

DLSS and XeSS (XMX) are AI, and they’re noticeably better than non-hardware-accelerated alternatives.

Already had that Google thingy for years now. The USB/NVMe device for image recognition. Can’t remember what it’s called now. Cost like $30.

Edit: Google Coral TPU

I use it heavily at work nowadays. It would be nice to run it locally.

You don’t need AI enhanced hardware for that, just normal ass hardware and you run AI software on it.

But you can run more complex networks faster. Which is what I want.

Maybe I’m just not understanding what AI-enabled hardware is even supposed to mean

It’s hardware specifically designed for running AI tasks. Like neural networks.

An NPU, or Neural Processing Unit, is a dedicated processor or processing unit on a larger SoC designed specifically for accelerating neural network operations and AI tasks. Unlike general-purpose CPUs and GPUs, NPUs are optimized for data-driven parallel computing, making them highly efficient at processing massive multimedia data like videos and images and at processing data for neural networks.

https://github.com/huggingface/candle

You can look into this, however it’s not what this discussion is about

An NPU, or Neural Processing Unit, is a dedicated processor or processing unit on a larger SoC designed specifically for accelerating neural network operations and AI tasks.

Exactly what we are talking about.

Stick to the discussion of paying a premium for hardware not the software

Not sure what you mean? The hardware runs the software tasks more efficiently.

The discussion is whether people should/would pay extra for hardware designed around ai vs just getting better hardware

I’m curious what you use it for at work.

I’m a programmer so when learning a new framework or library I use it as an interactive docs that allows follow up questions.

I also use it to generate things like regex and SQL queries.

It’s also really good at refactoring code and other repetitive tasks like that

It does seem like a good translator for the less human-readable stuff like regex and such. I’ve dabbled with it a bit, but I’m a technical artist and haven’t found much use for it in the things I do.

Not the guy you were asking, but it’s great for writing PowerShell scripts.

I’m generally opposed to anything that involves buying new hardware. This isn’t the 1980s. Computers are powerful as fuck. Stop making software that barely runs on them. If they can’t make AI more efficient, then fuck it. If they can’t make game graphics good without a minimum of a $1000 GPU that produces as much heat as a space heater, maybe we need to go back to 2000s-era 3D. There is absolutely no point in making graphics more photorealistic than maybe Skyrim. The route they’re going is not sustainable.

The point of software like DLSS is to run stuff better on computers with worse specs than you’d normally need to run a game at that quality. There’s plenty of AI tech that can actually improve experiences, and saying that Skyrim graphics are the absolute max we as humanity “need” or “should want” is a weird take ¯\_(ツ)_/¯

The quality of games has dropped a lot, they make them fast and as long as it can just about reach 60fps at 720p they release it. Hardware is insane these days, the games mostly look the same as they did 10 years ago (Skyrim never looked amazing for 2011. BF3, Crysis 2, Forza, Arkham City etc. came out then too), but the performance of them has dropped significantly.

I don’t want DLSS and I refuse to buy a game that relies on upscaling to have any meaningful performance. Everything should be over 120fps at this point, way over. But people accept the shit and buy the games up anyway, so nothing is going to change.

The point is, we would rather have games looking like Skyrim with great performance vs ‘4K RTX real time raytracing ultra AI realistic graphics wow!’ at 60fps.

The quality of games has dropped a lot, they make them fast

Isn’t the public opinion that games take way too long to make nowadays? They certainly don’t make them fast anymore.

As for the rest, I also can’t really agree. IMO, graphics have taken a huge jump in recent years, even outside of RT. Lighting, texture quality, shaders, as well as object density and variety have all gotten a noticeable bump. Other than the occasional dud and the awful shader compilation stutter that has plagued many PC games over the last few years (but is getting more awareness now), I’d argue that game performance is pretty good for most games right now.

That’s why I see techniques like DLSS/FSR/XeSS/TSR not as a crutch, but as just one of the dozens of other rendering shortcuts game engines have accumulated over the years. That said, it’s not often we see a new technique deliver such a big performance boost with almost no visual impact.

Also, who decided that ‘we’ would rather have games looking like Skyrim? While I do like high FPS very much, I also like shiny graphics with all the bells and whistles. A game like ‘The Talos Principle 2’, for example, does hammer the GPU quite a bit on its highest settings, but it certainly delivers in the graphics department. So much so that I’ve probably spent as much time admiring the highly detailed environments as I did actually solving the puzzles.

Isn’t the public opinion that games take way too long to make nowadays? They certainly don’t make them fast anymore.

I think the problem here is that they announce them way too early, so people are waiting like 2-3 years for it. It’s better if they are developed behind the scenes and ‘surprise’ announced a few months prior to launch.

Graphics have advanced, of course, but it’s become diminishing returns, and now a lot of games resort to spamming post-processing effects and adding as much foliage and fog as possible to try to make them look better. I always bring Destiny 2 up in this conversation, because the game looks great, runs great, and the graphical fidelity is amazing: no blur, but no rough edges either. Versus pretty much any UE game, which has terrible TAA; if you disable it, everything is jagged and aliased.

DLSS etc are defo a crutch and they are designed as one (originally for real-time raytracing), hence the better versions requiring new hardware. Games shouldn’t be relying on them and their trade-offs are not worth it if you have average modern hardware where the games should just run well natively.

It’s not so much us wanting Skyrim specifically (maybe that one guy does); it’s just an extreme example to get the point across, I guess. It’s all subjective, and making things shiny obviously attracts people’s eyes during marketing.

I see. That I can mostly agree with. I really don’t like the temporal artifacts that come with TAA either, though it’s not a deal-breaker for me if the game hides it well.

A few tidbits I’d like to note though:

they announce them way too early, so people are waiting like 2-3 years for it.

Agree. It’s kind of insane how far in advance some games are being announced. That said, 2-3 years back then was the time it took for a game to get a sequel. Nowadays you often have to wait an entire console cycle for a sequel to come out, instead of getting a trilogy of games in one.

Games shouldn’t be relying on them and their trade-offs are not worth it

Which trade-offs are you alluding to? Assuming a halfway decent implementation, DLSS 2+ in particular often yields a better image quality than even native resolution with no visible artifacts, so I turn it on even if my GPU can handle a game just fine, even if just to save a few watts.


The trade-offs being the artifacts: while they’re not that noticeable to most, I did try it, and anything in fast motion does suffer. Another is the hardware requirement. I don’t mind it existing, I just don’t think mid-to-high-end setups should ever have to enable it for a good experience (well, what I personally consider a good experience :D).

We should have stopped with Mario 64. Everything else has been an abomination.

Only 7% say they would pay more, which to my mind is the percentage of respondents who have no idea what “AI” in its current bullshit context even is

Or they know a guy named Al and got confused. ;)

Maybe I’m in the minority here, but I’d gladly pay more for Weird Al enhanced hardware.

Hardware breaks into a parody of whatever you are doing

Me - laughing and vibing

A man walks down the street / He says, why am I short of attention / Got a short little span of attention / And woe, my nights are so long

I figure they’re those “early adopters” who buy the New Thing! as soon as it comes out, whether they need it or not, whether it’s garbage or not, because they want to be seen as on the cutting edge of technology.

I am generally unwilling to pay extra for features I don’t need and didn’t ask for.

Ray tracing is something I’d pay for even if unasked, assuming it meaningfully improves quality and doesn’t demand outlandish prices.

And they’d need to put it in unasked and cooperate with devs else it won’t catch on quickly enough.

Remember Nvidia Ansel?

We’re not gonna make it, are we? People, I mean.

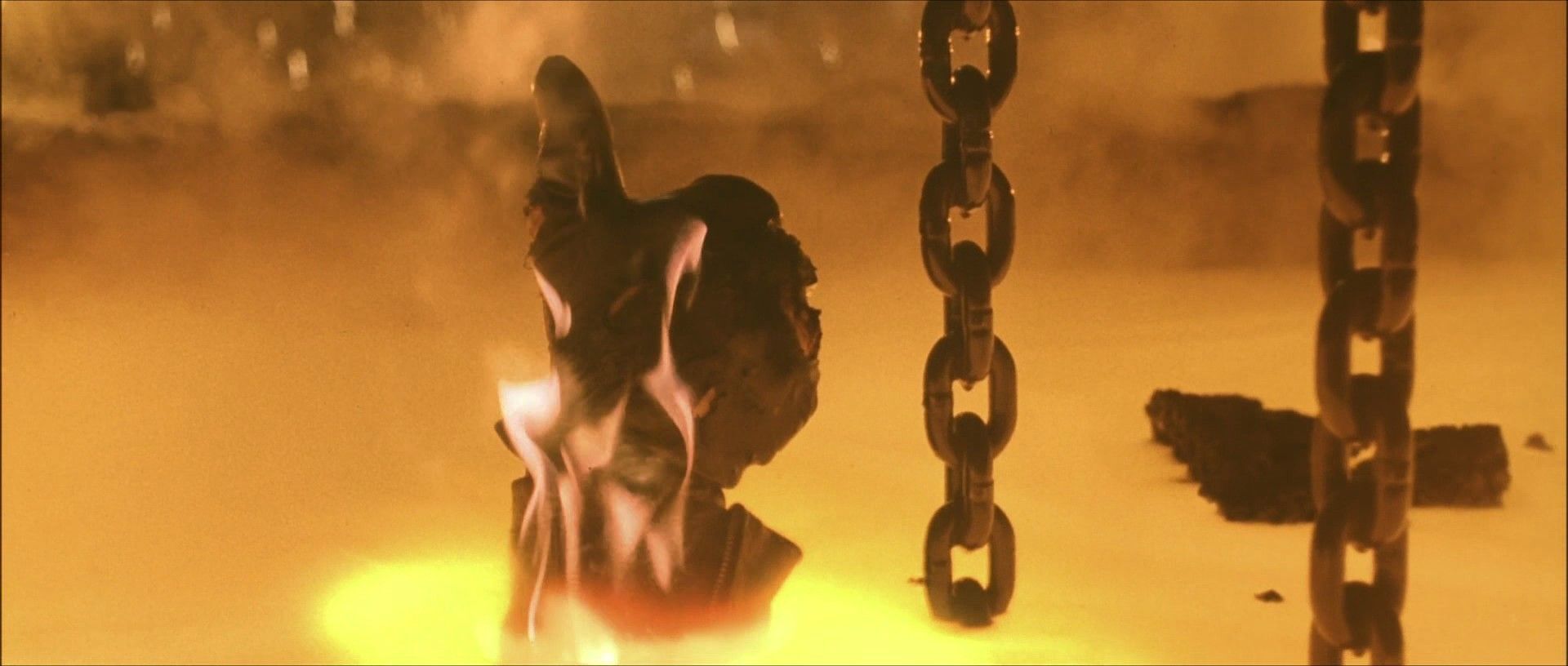

Didn’t John Connor befriend the second AI he found?

yeah but it didn’t try to lock him into a subscription plan or software ecosystem

It locked him into the world of the Terminators? IMO, a mighty subscription.

/j

yeah but it didn’t try to lock him into a subscription plan or software ecosystem

Not the AI’s fault. The first one (the killer) was remotely controlled by the product of a big corp (Skynet); the other one was a local, offline one.

Moral of the story: there’s difference between the AI that runs locally on your GPU and the one that runs on Elon’s remote servers… and that difference may be life or death.

I was recently looking for a new laptop and I actively avoided laptops with AI features.

Look, me too, but the average punter on the street just looks at new AI features and goes, OK, sure, give it to me. Tell them about the dodgy shit that goes with AI and you’ll probably get a shrug at most.

Let me put it in lamens terms… FUCK AI… Thanks, have a great day

FYI, the term is “layman’s”, as if you were using the language of a layman, or someone who is not specifically experienced in the topic.

Sounds like something a lameman would say

Well, when life hands you lémons…

The biggest surprise here is that as many as 16% are willing to pay more…

Acktually it’s 7% that would pay, with the remainder ‘unsure’

I mean, if framegen and supersampling solutions become so good on those chips that regular versions can’t compare I guess I would get the AI version. I wouldn’t pay extra compared to current pricing though

What does AI enhanced hardware mean? Because I bought an Nvidia RTX card pretty much just for the AI enhanced DLSS, and I’d do it again.

When they start calling everything AI, soon enough it loses all meaning. They’re gonna have to start marketing things as AI-z, AI 2, iAI, AIA, AI 360, AyyyAye, etc. Got their work cut out for em, that’s for sure.

Instead of Nvidia knowing some of your habits, they will know most of your habits. $$$.

Just saying, I’d welcome some competition from other players in the industry. AI-boosted upscaling is a great use of the hardware, as long as it happens on your own hardware only.

Who in the heck are the 16%?

- The ones who have investments in AI
- The ones who listen to the marketing
- The ones who are big Weird Al fans
- The ones who didn’t understand the question

I would pay for Weird-Al enhanced PC hardware.

Those Weird Al fans will be very disappointed

- The nerds that care about privacy but want chatbots or better autocomplete


I’m interested in hardware that can better run local models. Right now the best bet is a GPU, but I’d be interested in a laptop with dedicated AI chips that would work with PyTorch. I’m a novice, but I know it takes forever on my current laptop.

Not interested in running copilot better though.

Maybe people doing AI development who want the option of running local models.

But baking AI into all consumer hardware is dumb. Very few want it. SaaS AI is a thing. To the degree SaaS AI doesn’t offer the privacy of local AI, networked local AI on devices you don’t fully control offers even less. So it makes no sense for people who value convenience, and it offers no value for people who want privacy. It only offers value to people doing software development who need more playground options, and I can go buy a graphics card myself, thank you very much.

I would if the hardware was powerful enough to do interesting or useful things, and there was software that did interesting or useful things. Like, I’d rather run an AI model to remove backgrounds from images or upscale locally, than to send images to Adobe servers (this is just an example, I don’t use Adobe products and don’t know if this is what Adobe does). I’d also rather do OCR locally and quickly than send it to a server. Same with translations. There are a lot of use-cases for “AI” models.

I don’t think the poll question was well made… “would you like to part with your money for…” *vaguely shakes hand in air* “…AI?”

People were already paying for “AI” even before ChatGPT came out and popularized it: DLSS.

Okay, but hear me out. What if the OS got way worse, and then I told you that paying me for the AI feature would restore it to a near-baseline level of original performance? What then, eh?

I already moved to Linux. Windows is basically doing this already.

One word. Linux.

I’m willing to pay extra for software that isn’t