I have a couple of home servers, both with Proxmox as hypervisor: one VM with Ubuntu 22.04 that just runs Docker containers, one with Open Media Vault, one with Home Assistant (HA OS) and a couple of Windows VMs for testing. Since I want to move away from OMV, right now I see 2 options:

- stay with Proxmox and find another NAS OS

- use Unraid as NAS and hypervisor

What other option would you suggest?

I’ve been using unraid for a few years. Super happy with it. Recently migrated from using their normal array to zfs since I got a hold of some enterprise SAS drives.

Been using unraid for a couple of years now also, and really enjoying it.

Previously I was using ESXi and OMV, but I like how complete Unraid feels as a solution in itself.

I like how Unraid has integrated support for spinning up VMs and docker containers, with UI integration for those things.

I also like how Unraid’s fuse filesystem lets me build an array from disks of mismatched capacities, and arbitrarily expand it. I’m running two servers so I can mirror data for backup, and it was much more cost effective that I could keep some of the disks I already had rather than buy all-new.

Fuse file system? I’ve never heard about it. Is it a proper file system or does it work on ZFS/others?

The clue with Unraid is in the name. The goal was all about having a fileserver with many of the benefits of RAID, but without actually using RAID.

For this purpose, Fuse is a virtual filesystem which brings together files from multiple physical disks into a single view.

Each disk in an Unraid system just uses a normal single-disk filesystem on the disk itself, and Unraid distributes new files to whichever disk has space, yet to the user they are presented as a single volume (you can also see raw disk contents and manually move data between disks if you want to - the fused view and raw views are just different mounts in the filesystem)

This is how Unraid allows for easily adding new drives of any size without a rebuild, but still allows for failure of a single disk by having a parity disk - as long as the parity is at least as large as the biggest data disk.
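To make the sizing rule concrete, here is a minimal sketch (not Unraid's actual logic; the function name and the disk sizes are made up for illustration). Usable space is just the sum of the data disks, and the layout is only valid when the parity disk is at least as large as the biggest data disk:

```python
def usable_capacity_tb(data_disks_tb, parity_tb):
    """Return usable capacity (TB) of a single-parity array of mixed-size disks.

    Hypothetical helper: raises ValueError if the parity disk is smaller
    than the largest data disk.
    """
    if not data_disks_tb:
        return 0
    largest = max(data_disks_tb)
    if parity_tb < largest:
        raise ValueError(
            f"parity disk ({parity_tb} TB) is smaller than "
            f"the largest data disk ({largest} TB)"
        )
    # Each data disk keeps its own filesystem, so capacities simply add up.
    return sum(data_disks_tb)


if __name__ == "__main__":
    print(usable_capacity_tb([4, 8, 2], parity_tb=8))  # 14
```

Adding another data disk later only changes the sum, as long as the new disk is no bigger than the parity disk.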

Unraid have also now added ZFS zpool capability and as a user you have the choice over which sort of array you want - Unraid or ZFS.

Unraid is absolutely not targeted at enterprise where a full RAID makes more sense. It’s targeted at home-lab type users, where the ease of operation and ability to expand over time are selling points.

So it’s like SnapRaid and MergerFS. Got it. Thanks

Not sure if it’s obvious from this comment, but also worth pointing out to folks learning about unraid that it still has parity drives that let you recover from disk failures - it’s not just JBOD.

Yup, my comment mentions the parity disk :)

Good to emphasise that a bit more though.

Bruh my reading comprehension…

FUSE is a “virtual” filesystem that can be used to make anything look like a local filesystem.

Example, encfs uses it to make an encrypted file tree appear decrypted to the user and performs encryption/decryption as needed on the fly.

Another example, Borg Backup uses it to let you browse backup snapshots as normal files even when they’ve been compressed, encrypted and/or deduplicated with other snapshots.

The Fuse file system isn’t the actual file system on each disk; it’s like an overlay that brings together the file systems on each disk. As a super basic setup, assuming you had two data drives, you could have a share called “MyFileShare” with two files in it: “File A” located at /mnt/disk1/MyFileShare/FileA and “File B” located at /mnt/disk2/MyFileShare/FileB. If you connect to the file share remotely or do an ls on /mnt/user/MyFileShare/ you’ll see both files A and B. So you create each share and it’ll distribute all your files across disks according to your specifications.
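The merged view can be imitated in a few lines. This is only a sketch of the idea, not how Unraid's FUSE layer is implemented: it uses temporary directories in place of /mnt/disk1 and /mnt/disk2, the helper names are made up, and "first disk wins" on a name clash is an assumption of the sketch.

```python
import os
import tempfile


def merged_listing(disk_roots, share):
    """Map each file name in `share` to the disk path that actually holds it."""
    merged = {}
    for root in disk_roots:
        share_dir = os.path.join(root, share)
        if not os.path.isdir(share_dir):
            continue  # this disk holds no part of the share
        for name in sorted(os.listdir(share_dir)):
            # Sketch assumption: the first disk wins if a name exists twice.
            merged.setdefault(name, os.path.join(share_dir, name))
    return merged


def demo():
    """Build two throwaway 'disks' and return the merged file names."""
    with tempfile.TemporaryDirectory() as base:
        disk1 = os.path.join(base, "disk1")
        disk2 = os.path.join(base, "disk2")
        for disk, filename in ((disk1, "FileA"), (disk2, "FileB")):
            os.makedirs(os.path.join(disk, "MyFileShare"))
            with open(os.path.join(disk, "MyFileShare", filename), "w") as f:
                f.write("example\n")
        return sorted(merged_listing([disk1, disk2], "MyFileShare"))


if __name__ == "__main__":
    print(demo())  # ['FileA', 'FileB']
```

Each file still lives whole on exactly one disk; only the listing is combined.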

This is the fuse file system, and it’s how UnRAID implements the “RAID-like” features. Unlike actual RAID, your files aren’t striped across the array; each file lives on one disk. So while you can have 1 or 2 parity drives that can rebuild your array in the case of a lost drive, unlike RAID you don’t lose your entire array if more drives fail than parity can cover. If you have one parity drive and a 5 disk array and lose two data drives, your parity can’t rebuild the lost data, but the data that’s on the other 3 disks is still accessible.
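For anyone wondering how one parity drive can bring back a lost disk, here is a toy sketch. It assumes plain XOR parity over equal-length blocks, which is the usual single-parity scheme; it is not taken from Unraid's code, and the function names are made up.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte strings together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild(surviving_disks, parity):
    """Recover the single missing disk from the survivors plus parity."""
    return xor_blocks(list(surviving_disks) + [parity])


if __name__ == "__main__":
    disks = [b"AAAA", b"BBBB", b"CCCC"]
    parity = xor_blocks(disks)
    # Lose disk 1: XOR of the remaining disks and parity gives it back.
    print(rebuild([disks[0], disks[2]], parity) == disks[1])  # True
```

With two disks missing, one XOR parity block no longer has enough information to recover either of them, but since nothing is striped the surviving disks are still readable on their own.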

What RAIDz are you using? How are you finding it?

raidz1. No issues so far. I’ve had some prior experience with zfs from work, so moving to it was a no brainer.

I use proxmox with truenas scale. It’s a great option, but you just have to make sure to pass the hdd controller PCI device through to the VM. This can either be the SATA controller on the motherboard if you can make that work, or a separate PCIe HBA.

Honestly I would get a pcie sata controller card. It is fairly inexpensive and doesn’t tie up the controller on the motherboard

Should I pass the whole controller? I have the Proxmox disk on the same controller, how do I do that?

You would need a separate controller.

Ouch…got it. Thanks

The LSI 9210 8i - IT mode is a great option and can be had for under $50.

Does it work with SATA drives and, most important: can it present the individual drives to the OS without RAID (since I would want to use a software RAID like RAIDz)?

Yeah, so the IT mode flash makes it just a JBOD controller, which is what truenas wants. It works with SAS and SATA. You’d need SFF-8086 to SATA cables. (One cable per 4 drives)

Interesting, I’ll have a look on how to flash the IT mode. Thanks!

On eBay they all seem to be coming from China at about $30 with cables (although they are the 8087 and not 8086). Could they be clones? In Europe (where I live) they cost about 60€.

What’s the advantage to using proxmox and virtualizing TrueNAS in your use case?

I’m looking to setup a TrueNAS box mostly as a file server (I have a bunch of spare drives sitting around, so I can duplicate locally and then backup to a cloud provider), but also as a docker host.

(I’m also researching some setups for friends businesses with the VMware debacle - they have a year to migrate).

I have a lot of services. I use Ansible to manage many of them, so they’re all in one VM. I use Home Assistant, which works best when installed as a whole VM or on bare metal. For the remaining services that I have yet to set up with Ansible, I keep the services that need the GPU on one VM, and everything else on another. Finally, I have an LXC container that is my SSH entrypoint and Ansible management system.

I could technically use TrueNAS Scale as a hypervisor for all this, but Proxmox has a lot of quality-of-life features that make it a better hypervisor. I could use Proxmox for ZFS and shares, but TrueNAS has a lot of quality-of-life features that make it a much better NAS, so I virtualize it.

Neat, thanks for the info.

Guess I need to read up on what Proxmox offers. I was focusing on TrueNAS as a simple all-in-one, but maybe Proxmox has something for me.

Running a Debian Bookworm hypervisor using KVM/QEMU with virt-manager for vms + Incus for lxc containers gives you a lot of freedom with how you use it.

edit: It also means you build your own hypervisor from parts - kinda like installing postfix/dovecot/mariadb/spamassassin instead of a packaged solution like mail-in-a-box. It takes more time and effort but I find I understand the underlying technologies better afterwards.

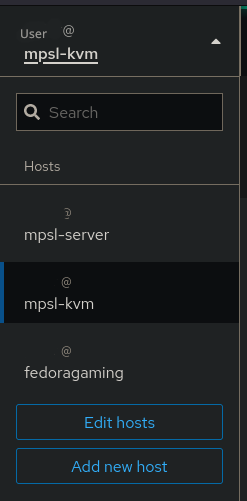

That’s something I like, just plain Debian with KVM. How does virt-manager compare to the Proxmox UI? Does it need to be installed on a separate PC (Windows?)?

No problem running virt-manager on the hypervisor itself. You can also use https://cockpit-project.org with the cockpit-virtual-machines addon to manage kvm vms from https://machineip:9090

Cool! I think I’ll give it a go! Does Cockpit need to be installed directly on the host OS or…?

Yeah, you need to install cockpit on any linux you wanna manage using it, then you can use the ssh keys to setup so your cockpit session on the hypervisor gives you access to your vms too.

I recently switched from Proxmox to Debian Bookworm with Incus (LXD fork) as my primary setup, and it’s been a pleasant experience. I also like the idea of using something like Cockpit to manage VMs, though I haven’t yet needed a VM over a container. I’ll also point out that Incus can handle VMs as well.

Stéphane Graber, Project leader of Linux Containers is also on the fediverse and responds to questions often.

For the hypervisor I recommend either Proxmox or XCP-ng. XCP-ng is technically a better hypervisor, but I personally use Proxmox because I like the UI.

For the NAS OS, I use and recommend TrueNAS Scale. You can run Docker containers on it. All this being said, I’ve never used Unraid so I don’t know how they compare.

Out of curiosity, why move from OMV? I was thinking about trying it out for a second NAS.

> Out of curiosity, why move from OMV? I was thinking about trying it out for a second NAS.

It’s probably a problem of not dedicating enough time to learning how it works. I’ve installed it a couple of times over the past years, but… I don’t like it much. It seems complicated to me (still, I probably haven’t dedicated enough time to it).

> For the NAS OS, I use and recommend TrueNAS Scale. You can run Docker containers on it

I’ll check it, thanks

Your reason to move from OMV is the same as mine for XCP-ng. It is supposed to be a better hypervisor, but I just did not like the UI at all. It doesn’t help that you have to host a small VM just to have a webGUI.

I had to build that small VM from source on a different machine because my XCP-ng install was refusing to set it up. Then I was able to move it over to the XCP-ng machine to self host.

I liked the UI and honestly I like the VM that keeps the web app separate from the hypervisor. But then it started looking like a massive pain to hotplug USB devices to the VMs, so I bounced.

I am trying Incus/LXD right now and enjoying it so far.

While you can do a lot of the stuff that PM does via Unraid and other tools, it’s all there in one spot. I love taking snapshots before upgrades, migrating machines between nodes live while I upgrade the nodes, having HA for my OPNsense and other important boxes, and the PBS backup system. I know you could do all this with other tools, but it’s damn convenient in PM and “just works”.

You can install a NAS vm in PM, just give it raw access to the disks you’re looking to use for data, and back them up independently. Don’t try to do something like overlay ZFS on ZFS.

Yeah I experimented with Truenas in a VM, it randomly dropped the pool. Do not do this.

If you can, separate host and storage. Run whatever hypervisor you like; XCP-ng is also good. Any NAS is good.

I recently tried out Cockpit on top of plain old Debian and it was really nice. You can manage VMs and whatnot, but it’s quite a bit more lightweight than Proxmox IMO.

> I recently tried out Cockpit on top of plain old Debian and it was really nice.

Now you should try LXD: https://lemmy.world/comment/6507871

You also could try OpenStack

Debian as OS; LXC/LXD/Incus for containers and VMs. BTRFS as filesystem. More: https://lemmy.world/comment/6507871

Incus better

Yes, no question there.

What do you mean with LXD/LXD? Edit: Oh, I got it: LXD/LXC, I’m reading the post you’ve linked

My bad, typo.

Nixos ❄️

Acronyms, initialisms, abbreviations, contractions, and other phrases which expand to something larger, that I’ve seen in this thread:

| Fewer Letters | More Letters |
|---|---|
| ESXi | VMWare virtual machine hypervisor |
| HA | Home Assistant automation software ~ High Availability |
| LXC | Linux Containers |
| NAS | Network-Attached Storage |
| PCIe | Peripheral Component Interconnect Express |
| RAID | Redundant Array of Independent Disks for mass storage |
| SATA | Serial AT Attachment interface for mass storage |
| SSH | Secure Shell for remote terminal access |
| ZFS | Solaris/Linux filesystem focusing on data integrity |

9 acronyms in this thread; the most compressed thread commented on today has 15 acronyms.

[Thread #502 for this sub, first seen 11th Feb 2024, 09:35]

What are your thoughts on ZFS? If that’s something you want to do, you could buy a PCIe SATA card and then pass it through to a TrueNAS VM.

I’ve never used it, but from what I’ve read, I want to try it. I think I’ll buy a SATA/SAS controller and pass it to TrueNAS/Proxmox. I’m thinking about installing TrueNAS as a VM in Proxmox, what do you think about it?

That’s what I do. It works well and it’s kind of cool having a virtual NAS

Thanks for your feedback!

Do hit the TrueNas forums. It’s very important if you’re not a ZFS pro.

I had to learn quite a bit before I got my setup right.

The FreeBSD people can be… abrasive at times, let it roll off your back if they are. TN is one of my favorites.

Thanks for the suggestion, I will!

Have you considered TrueNAS Scale?

Oh yeah, TrueNAS Scale is all fun and games until you find out that the Wireguard container is permanently broken because Debian changed the default interface names years ago and they’re stuck with eth0, and other small but unfixable annoyances like that. To make things worse, their container store depends on the charts repository, which depends on another thing and 300 more repositories, and fixing anything takes years.
